Posts posted by Almightygir

-

The high poly can be in whatever format you like; that part is irrelevant. Outside of that, it seems correct enough.

Well, with the latest dotXSI exporters, which fixed vertex normals... the GLM vertex normals (as displayed in ModView) exactly match the dotXSI and native 3ds Max mesh vertex normals... and the UVs appear unharmed as well.

But do the TANGENTS display correctly?

-

Yeah, I'm pretty sure UE4 does an internal conversion after the FBX has been imported into the engine, because at runtime, FBX is a dick.

Edit: This is what we did at Marmoset, btw... In fact, we did it for all import formats. We had our own internal mesh format that we converted everything to.

-

I, for one, don't even use xNormal. I like to keep everything nice and tidy in one program. I bake and texture in Substance Painter and, if required, I transport those baked maps into Substance Designer to process them, which I usually only do when I've used a skew mesh for baking.

I guess I could move my world-space normal maps into xNormal and convert them to tangent-space maps there, which should get my work in sync, unless I'm missing something, right @@Almightygir?

@@minilogoguy18 It's not just a matter of re-exporting. I have to retexture your ST entirely. I might create a proper high poly this time and update the UVs for the low poly.

It miiiight work. Except Painter bakes world-space normals per vertex on the low poly; it doesn't do a high > low world-space map, which is totally lame, and I've complained to Nicolas Wirrmann (lead designer for SP) about it many times.

-

Way to skip over my two posts....

No, xNormal does not support GLM, but someone could make a plugin for it.

Sorry, man, what an asshole.

Unfortunately, time is the issue for me, or I'd jump in and help. I couldn't commit right now, as I'm in the middle of doing a master's degree along with a ton of freelance work. I do wonder, though: if Xycaleth has written an FBX>GLM converter, could that in some way be integrated directly into the renderer, so it does an "on the fly" conversion from FBX to GLM at load time? That would likely give you finer control over the final tangents of the GLM file as well, since you can rip them straight from the FBX.

As for any kind of 3ds Max plugin, I think Archangel is on to something, just the wrong way round...

xNormal has exporter plugins supporting 3ds Max, Photoshop, and Maya that export xNormal's own mesh format (.SBM). The older version of xNormal (v3.17.16 ...no longer available to download from xNormal.net) supports older versions of 3ds Max (Max7-2013) and Maya (8.5-2013). I will upload it to Utilities shortly. xNormal also supports the dotXSI file format (...but it doesn't like mesh objects without texture coordinates, meaning you must do an ExportSelected from 3ds Max and omit the bolts/tags and Stupidtriangle_off).

@@Almightygir, @@Psyk0Sith: since xNormal directly supports importing dotXSI mesh files, and we export dotXSI files to be compiled into Ghoul2 models using Carcass... I would recommend that we create the normal maps using the exported High-/Low-Poly meshes in dotXSI format and import the dotXSI files into xNormal for normal map baking... it has options to use exported normals (as written in the dotXSI file). Seems like the best option since Carcass compiles all the dotXSI files into Ghoul2 GLM/GLA, yes (...at least for 3ds Max, Softimage, Maya workflows)?

This is probably the best solution to use. And rather than doing what DT suggested (writing a 3ds Max tangent basis for xNormal), just use the mikktspace plugin that xNormal uses. There is another plugin option that will need to be enabled (I'm trying to remember where it is, but I'm pretty sure it's in the tangent space calculator, and it says something like "calculate tangents and binormals").

-

The LODs are irrelevant; if your bake came out clean for the highest LOD, then it will be fine. The LODs might have some artifacts, but it won't blow up your computer. Also, if mikkt syncs with the current renderer and .glm, then everyone should use xNormal; if not, we can try Handplane to get the best sync possible.

Does xNormal support the GLM format? If so, then that's indeed what people should use to bake their normals.

-

Ah, I see where your confusion is...

When you bake a normal map, you're projecting the vertex normals of a high poly mesh onto a per-pixel map on a low poly mesh.

The biggest problem is with exporting a low poly fbx (which, yes, is supposed to store tangents) elsewhere to bake, then exporting that same mesh as a GLM and hoping that the tangents are the same... it sometimes just goes wrong *because*. Literally *just because*. Also, you're correct about obj not storing tangents; however, just about every piece of software out there calculates tangents for obj files the same way.

-

As long as the mesh tangents on export are not modified in any way by the renderer, I guess that could work.

-

Okay, I'll try to simplify further...

In order to bake your normal maps, you have to export to either fbx or obj first, because no baker supports GLM. This is mesh "a". Mesh a has a set of tangents that can largely be reliably predicted, because the obj format is simple as balls and fbx is well documented. In either case, we know what the mesh tangents are on export, but we also know (or can be reasonably sure) they won't be the same as GLM's.

Once you've baked, you then have to export to GLM, which has a different set of tangents.

The renderer would be trying to use a normal map baked with tangents a on a mesh whose vertices now have tangents b. The math wouldn't add up.
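For reference, a minimal sketch of the textbook per-triangle tangent calculation a tool might run on an obj that stores no tangents: solve the two triangle edges against their UV deltas. This is an illustration, not any specific baker's code; real tools then average these per vertex and orthogonalize against the normal, and mikktspace adds its own splitting and weighting rules on top, which is exactly where two tools can end up disagreeing.

```python
def triangle_tangent(p0, p1, p2, uv0, uv1, uv2):
    """Per-triangle tangent/bitangent from positions and UVs (not normalized)."""
    # Edge vectors in object space.
    e1 = [p1[i] - p0[i] for i in range(3)]
    e2 = [p2[i] - p0[i] for i in range(3)]
    # Matching UV deltas along those edges.
    du1, dv1 = uv1[0] - uv0[0], uv1[1] - uv0[1]
    du2, dv2 = uv2[0] - uv0[0], uv2[1] - uv0[1]
    # Solve e1 = du1*T + dv1*B and e2 = du2*T + dv2*B for T and B.
    det = du1 * dv2 - du2 * dv1
    if abs(det) < 1e-12:
        raise ValueError("degenerate UVs: tangent is undefined")
    r = 1.0 / det
    tangent = tuple((dv2 * e1[i] - dv1 * e2[i]) * r for i in range(3))
    bitangent = tuple((du1 * e2[i] - du2 * e1[i]) * r for i in range(3))
    return tangent, bitangent


t, b = triangle_tangent((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0), (1, 0), (0, 1))
# t == (1.0, 0.0, 0.0), b == (0.0, 1.0, 0.0)
```

Mirror the U coordinate and the tangent flips, which is why handedness has to be tracked somewhere.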
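A minimal sketch of why it doesn't add up, assuming a flat patch whose normal is +Z: the baker encodes a high-poly normal into tangent space using basis a, and the renderer decodes the same texel using basis b. Basis b here is just basis a rotated 30 degrees around the normal, a made-up stand-in for "a different tangent calculation".

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def encode(world_n, t, b, n):
    # Baker: express the high-poly normal in the low poly's tangent basis.
    return (dot(world_n, t), dot(world_n, b), dot(world_n, n))


def decode(ts_n, t, b, n):
    # Renderer: rebuild a world normal from the texel using ITS tangent basis.
    return normalize(tuple(ts_n[0] * t[i] + ts_n[1] * b[i] + ts_n[2] * n[i] for i in range(3)))


def angle_deg(a, b):
    return math.degrees(math.acos(max(-1.0, min(1.0, dot(a, b)))))


N = (0.0, 0.0, 1.0)
Ta, Ba = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)           # tangents "a" (what the baker saw)
c, s = math.cos(math.radians(30)), math.sin(math.radians(30))
Tb, Bb = (c, s, 0.0), (-s, c, 0.0)                  # tangents "b" (what ended up in the GLM)

high_poly_normal = normalize((0.5, 0.0, 1.0))       # surface detail leaning along +X
texel = encode(high_poly_normal, Ta, Ba, N)         # what gets written to the map

same = angle_deg(decode(texel, Ta, Ba, N), high_poly_normal)        # ~0 degrees
mismatched = angle_deg(decode(texel, Tb, Bb, N), high_poly_normal)  # about 13 degrees
print(same, mismatched)
```

With matching tangents the decoded normal is the high-poly normal; with mismatched tangents the same texel lights as if the surface were tilted somewhere else.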

-

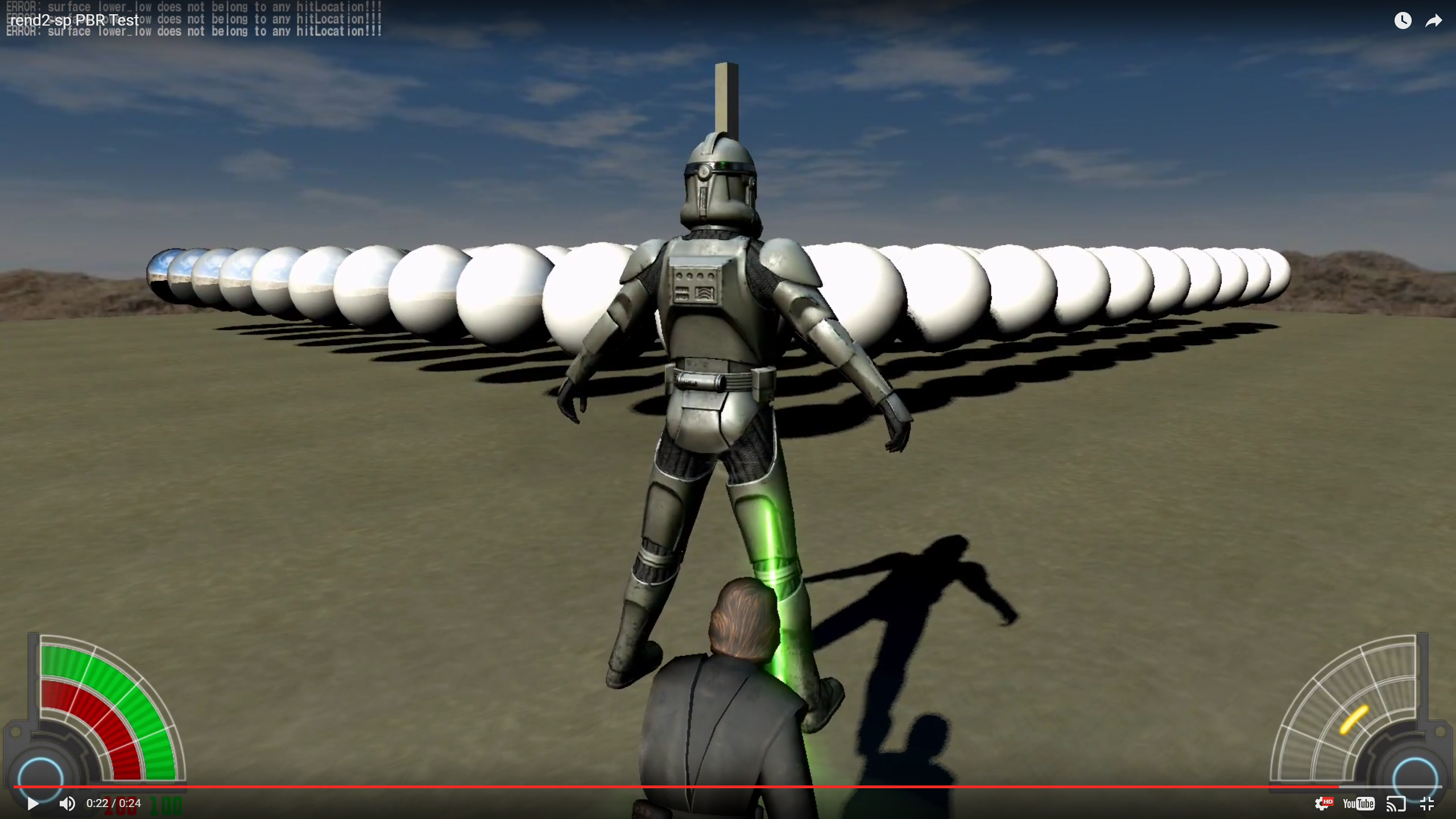

@@Almightygir, @@Xycaleth, what's wrong with the Clone Trooper results @@SomaZ posted in the video? It appears that the normal maps have now been sorted out and fixed for both MD3 and GLM models... this is a huge achievement enabling a PBR workflow in this upgrade effort; things appear to be synced up now in rend2. Good to go now, right?

This image is probably the best way to explain the problem here:

I'm aware that this isn't Unity, but the problem is largely the same. At the time that shot was taken, there were (and still are, because legacy bullshit) many different methods of authoring normal maps for Unity, and of generating the tangents for the meshes. You can see that not only do different bakers calculate tangent space differently, but having the mesh tangents imported and read correctly by both the baker and the renderer is important for a proper, good-looking result.

In the turnaround posted earlier:

The most obvious error is on the central plate on the butt; there were a few others I spotted. And while you might say "eh... it's not THAT bad...", it's a problem that will cause artists to pull their hair out over "Y IT LOOK BAD?!" until the end of time. Simply because of a rendering mismatch, they'll always wonder if it's them or the renderer that fucked up. Don't give them that doubt; fix the renderer so they know it's their shitty artwork.

I'm not trying to belittle the accomplishment; 90% of the work is done... It's time for the last 90% now.

-

Well, the problem is this:

In order to bake the maps out, you'll have to bake in Max/Maya/Substance/whatever, right? Outside of Max and Maya (which don't use mikkt), nothing will support the GLM format. So you will always be baking with one set of tangents (because obj handles them differently to fbx, and certainly differently to GLM), and then rendering with another set of tangents.

BUT, it will look a lot closer than it does now.

-

Well, the tangent calculations should be done by the renderer, but the tangents need to come from somewhere for those calculations to happen. For each vertex, you should be able to read a tangent, normal, and binormal.

I'm not sure why it would be so difficult to store tangents in the GLM format. You have access to the format, you know how to write it, and you know how to read from it. So add a new boolean, set on export only if the user wants it, and write the tangent and binormal info at the end of the file. When reading, check for that bool: if it's true, find the new tangent info; if it's false or not present, treat it like an older GLM file. From my own (limited) experience of writing mesh importers, this should be doable. That said, I don't know the inner workings of the engine, or rather, how organized or disorganized it is.
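A minimal sketch of that flagged approach, in Python for brevity. The layout (a `TMSH` magic, a version number, a flags word, a trailing tangent/binormal block) is entirely hypothetical and is not the real GLM header; it only shows the "check the bool, otherwise treat it as legacy" logic, with version 1 standing in for files written before the flag existed.

```python
import struct

MAGIC = b"TMSH"          # hypothetical toy format, NOT the real GLM layout
FLAG_HAS_TANGENTS = 0x1  # the new "boolean" bit


def write_mesh(positions, tangents=None, binormals=None):
    """Version 2 writer: positions first, optional tangent/binormal block at the end."""
    flags = FLAG_HAS_TANGENTS if tangents is not None else 0
    out = bytearray(MAGIC)
    out += struct.pack("<III", 2, flags, len(positions))
    for p in positions:
        out += struct.pack("<3f", *p)
    if flags & FLAG_HAS_TANGENTS:
        # Assumes binormals are supplied alongside tangents, one pair per vertex.
        for t, b in zip(tangents, binormals):
            out += struct.pack("<6f", *t, *b)
    return bytes(out)


def read_mesh(data):
    if data[:4] != MAGIC:
        raise ValueError("not a mesh file")
    offset = 4
    (version,) = struct.unpack_from("<I", data, offset)
    offset += 4
    flags = 0
    if version >= 2:  # version 1 files have no flags field: treat as legacy
        (flags,) = struct.unpack_from("<I", data, offset)
        offset += 4
    (count,) = struct.unpack_from("<I", data, offset)
    offset += 4
    positions = [struct.unpack_from("<3f", data, offset + 12 * i) for i in range(count)]
    offset += 12 * count
    tangents = binormals = None
    if flags & FLAG_HAS_TANGENTS:
        rows = [struct.unpack_from("<6f", data, offset + 24 * i) for i in range(count)]
        tangents = [r[:3] for r in rows]
        binormals = [r[3:] for r in rows]
    return positions, tangents, binormals
```

An old reader that stops after the positions never sees the trailing block, and a new reader falls back to "no tangents, compute them like before" when the bit is clear.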

-

Allow me:

There is no proper normal map rendering workflow for 3ds Max. 3ds Max isn't even synced to itself... or Stingray, which is hilarious. Maya might be possible. But why sync to either of those when the core/popular baking tools are using mikktspace?

As for PBR workflows... it's really simple: texture the way you always did, and if you're a good texture artist, you'll have no problem. All that's really changed about the way things are rendered in PBR compared to legacy methods is that energy is now conserved, so as a surface becomes more reflective, it becomes less diffuse, simples.

Personally, I would suggest scrapping the modification of older formats altogether. Leave them in as legacy, so old models will "work". But add FBX support and be done with it. If you're overhauling an entire renderer, at some point you HAVE to draw a line and say "this old shit won't work".
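A scalar sketch of that energy split, assuming Schlick's Fresnel approximation for the specular part (a common choice, not necessarily what rend2 uses). Real shaders also factor in roughness, metalness and a proper diffuse BRDF; this only shows that the diffuse and specular weights never add up to more than what came in.

```python
def fresnel_schlick(f0, cos_theta):
    """Schlick's approximation of Fresnel reflectance (single channel)."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def split_energy(albedo, f0, cos_theta):
    """Return (diffuse, specular) weights for one channel."""
    specular = fresnel_schlick(f0, cos_theta)
    # Whatever gets reflected can't also be diffused.
    diffuse = albedo * (1.0 - specular)
    return diffuse, specular


dielectric = split_energy(0.8, 0.04, 1.0)  # low reflectance: mostly diffuse
shiny = split_energy(0.8, 0.9, 1.0)        # high reflectance: little diffuse left
```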

Edit:

HAH! I'm referenced in one of those links.

-

Shader is a mess right now. Actually, I want to rewrite some parts of it, but first I want to add some other stuff to the renderer; otherwise we'd be doing things twice or thrice. I'd love someone else to check the shader. I'll mention you once the renderer is, let's say, prepared. Right now it uses a wild mix of stuff, like a specular BRDF mixed from Renaldas' SIGGRAPH 2015 talk and Frostbite (super gross).

@@DT85 Thank you for the kind words. Besides that, have you tried compiling your maps with deluxe mapping? This kind of works now. It saves light directions per surface. If there is more than one light affecting the surface, the light directions get averaged (this looks shitty, but maybe your maps can work like that).

Sure, man. I don't check here that often, so you're probably better off emailing me if you need to get hold of me:
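A rough sketch of what averaging several light directions into one stored direction could look like, assuming an intensity-weighted sum that is then normalized (the map compiler's actual weighting may differ). It also shows why the result can look bad: two equal lights from opposite sides cancel out, leaving no usable direction at all.

```python
import math


def average_light_direction(directions, intensities):
    """Blend several unit light directions into the single vector a deluxemap can hold."""
    summed = [0.0, 0.0, 0.0]
    for d, w in zip(directions, intensities):
        for i in range(3):
            summed[i] += d[i] * w
    length = math.sqrt(sum(x * x for x in summed))
    if length < 1e-6:
        return None  # lights cancel out: no meaningful dominant direction
    return tuple(x / length for x in summed)


diagonal = average_light_direction([(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [1.0, 1.0])
cancelled = average_light_direction([(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)], [1.0, 1.0])
```

The diagonal case points halfway between two lights that neither actually comes from, which is where the "shitty" look comes from.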

lee.devonald[at]gmail[dot]com

-

Also, hi Psyk0!

-

Well, the problem is that you'll want the GLM format to be able to store custom tangents; that's how game engines currently sync normal maps (those using the mikktspace normal format, anyway). They do this by storing whatever tangents the mesh has (either user-created or auto-generated) in the fbx file, so the baker can bake normals using those exact tangents and the renderer can render using the same tangents.

If the mesh tangents change at any point in the chain after normal maps are baked, they will never be in sync, and that's what leads to the rendering errors seen above.

The FBX documentation tells you how they store tangent and binormal data (you will need both). With that data stored in the mesh, you can use the info here to make sure that the renderer is synced to Substance, Knald and Toolbag's normal map bakers: https://github.com/tcoppex/ext-mikktspace
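A small sketch of the per-pixel reconstruction described in the comments of the reference `mikktspace.h`, assuming the per-vertex tangent is stored as xyz plus a handedness sign, with `bitangent = sign * cross(normal, tangent)`. The function names here are made up; the point is that the renderer rebuilds the basis exactly the way the baker did. Those same comments also say to use the interpolated normal and tangent without normalizing them first when building the bitangent, if you want an exact match.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def perturb_normal(vertex_normal, vertex_tangent4, map_normal):
    """vertex_tangent4 is (tx, ty, tz, sign); map_normal is the decoded tangent-space texel."""
    t = vertex_tangent4[:3]
    sign = vertex_tangent4[3]
    # Rebuild the bitangent from the normal, tangent and handedness sign.
    b = tuple(sign * x for x in cross(vertex_normal, t))
    # Transform the tangent-space normal into the mesh's space, then normalize.
    return normalize(tuple(map_normal[0] * t[i] + map_normal[1] * b[i] + map_normal[2] * vertex_normal[i]
                           for i in range(3)))
```

Storing only the sign halves the binormal storage, but a full binormal (as the FBX route gives you) works too, as long as baker and renderer agree.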

-

Good stuff guys.

Looks like the tangents for the older models are fucked (funky shading is definitely visible). It might be worth taking the attitude of: older models load and work, but look bad... start working in fbx from now on, guyzzzz. Or something along those lines.

Also, there's something fucky going on with the Fresnel term on the spheres in the background; it's all kinds of weird. Let me know if you want me to take a look at the shader code.

Question: how are you handling the importance sampling? In real time? Or using the split-sum approximation Epic posted up in 2013?
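For reference, the split-sum approximation from Epic's "Real Shading in Unreal Engine 4" course notes splits the importance-sampled estimate of the specular integral into two sums. The first is prefiltered into the environment map's mip levels per roughness; the second is precomputed into a 2D lookup table over n·v and roughness, which gives a scale and bias for F0.

```latex
\frac{1}{N}\sum_{k=1}^{N}\frac{L_i(\mathbf{l}_k)\,f(\mathbf{l}_k,\mathbf{v})\cos\theta_{\mathbf{l}_k}}{p(\mathbf{l}_k,\mathbf{v})}
\;\approx\;
\left(\frac{1}{N}\sum_{k=1}^{N} L_i(\mathbf{l}_k)\right)
\left(\frac{1}{N}\sum_{k=1}^{N}\frac{f(\mathbf{l}_k,\mathbf{v})\cos\theta_{\mathbf{l}_k}}{p(\mathbf{l}_k,\mathbf{v})}\right)
```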

-

This game doesn't yet have a good enough Revan; they all look awkward and fat. They weren't easy to do, I'm sure, but since when has anyone made a player model that is perfect from day one? I think the Revan model is yet to be made. I know nothing about Darth Bane, so I won't comment on that.

This is how Revan should look in-game: like a reborn that has been upgraded.

For future reference: These kinds of posts are poison, they are the reason all of the original content creators left.

By all means, dislike the work people do. Feel free to not download a model you don't like; that's your choice. But to say that there is no good version of this character is a lie. There are good versions; you just don't like them.

If you want one that looks like that picture, make it yourself.

And before you come back with all of the age-old excuses as to why you can't make it yourself, please remember that I don't even have a high-school-equivalent grade in any field of art. I flunked it; I didn't even take the exam. I got where I am now by trying, failing, and getting better.

-

I had just written up a fucking huge post, but I can sum it all up with a TL;DR...

It's not worth my time or effort. None of this is. None of you are. Hapslash said everything I ever could, and without swearing like I wanted to.

(Except, like, Minilogoguy, Inyri, Corto, Psyk0sith: you guys can have my time.)

-

This thread pisses me off in ways I find difficult to express.

-

It's not that it can't do them, it's the way it does them. Anyway, this is a very in-depth argument that I just don't have the energy for... but there's a reason why Maya is the industry standard for animation.

-

Once you've done your simulation and animation, export to fbx, import to Max, and export from there.

Max is certainly a capable, high-end 3D package. It's certainly not a very capable, high-end animation suite, not by comparison to Maya, anyway.

-

I realise most of us (including me) are more comfortable with Max. But seriously, if you're trying to do any kind of animation sim, complex rigging, or anything like that, switch to Maya.

-

It's hard for a parent to admit that his bastard son is better looking, a better fighter and has a bigger dick than his marital son. Look what happened to Jon Snow...

Jon Snow is the child of Lyanna Stark and Rhaegar Targaryen, not Eddard Stark and some tavern wench. Jon Snow literally has dragon's blood.

However, that's pretty much the same thing that happened here: Ned (Autodesk) adopted someone else's son (XSI) as its own before they died. Now we just have to wait for Joffrey (Luxology) to cut off Ned's head.

FEATURE: OpenGL 3 Renderer

in Dark Forces II Mod


You know, you could have asked me that too.

Store vert data as a struct, add a new entry to the struct, profit.
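Taken literally, that might look something like this sketch. The layouts are hypothetical (not the actual GLM or rend2 vertex structs): adding a tangent entry (xyz plus handedness sign) grows the vertex from 32 to 48 bytes, and old data can still be loaded by giving the new field a default.

```python
import struct
from dataclasses import dataclass

# Hypothetical layouts for illustration only.
OLD_LAYOUT = struct.Struct("<3f3f2f")    # position, normal, uv
NEW_LAYOUT = struct.Struct("<3f3f2f4f")  # position, normal, uv, tangent (xyz + sign)


@dataclass
class Vertex:
    position: tuple
    normal: tuple
    uv: tuple
    # New entry. The default is only a placeholder for data that predates it;
    # real code would recompute tangents for such meshes.
    tangent: tuple = (1.0, 0.0, 0.0, 1.0)

    def pack(self) -> bytes:
        return NEW_LAYOUT.pack(*self.position, *self.normal, *self.uv, *self.tangent)


def from_old_bytes(raw: bytes) -> Vertex:
    """Read an old-layout vertex; the tangent falls back to its default."""
    px, py, pz, nx, ny, nz, u, v = OLD_LAYOUT.unpack(raw)
    return Vertex((px, py, pz), (nx, ny, nz), (u, v))
```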