
Posts posted by Almightygir


https://discordapp.com/channels/200934142278369281

I pop in from time to time. Though we could use a separate channel to chat.

Oh Arch,

I'll make a list when I get some time. I'm very busy right now (work and stuff).

That didn't link to anything =[


Question:

Do you guys have a discord/slack channel that i can hang out in?


But Marmoset won't run JKA animations

Toolbag 3 supports animations via geometry caching, so while it won't natively run the animations, if you export the mesh plus whatever test animation as FBX or Alembic, you can view them in Toolbag.

that said, viewing animations is about seeing how a mesh deforms, not how a texture looks. if you want a solid PBR renderer to look at things before exporting to the engine, that's what Toolbag is for: it's specifically designed to be middleware for previs and lookdev during the iteration stages.

oh and because it bakes textures (in realtime!!!!!!!!!!!) it's fucking awesome.

anyway, all i'm saying is: updating a renderer is no trivial thing. and since there is already a previs tool that exists, it's a low priority (in my personal opinion as a member of the public).


haha awesome dude! now for the fun part: Spherical harmonics


hey sorry, been at uni all day:

Xycaleth is correct.


Since you'd just multiply one with the other in the shader, it makes more sense to do it directly in the texture, to avoid an additional texture call or using a texture channel you could reserve for something else.
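A minimal sketch of that offline multiply, assuming the AO and cavity bakes are same-sized 8-bit grayscale maps held as flat lists of 0-255 values. The function name and in-memory layout are hypothetical; a real tool would read and write image files.

```python
def combine_occlusion(ao, cavity):
    """Multiply two 8-bit occlusion maps texel by texel (hypothetical helper).

    Both inputs are flat lists of 0-255 values of equal length. Multiplying in
    normalized [0, 1] space and re-quantizing gives the same result the shader
    would get from ao * cavity, but costs only one texture channel.
    """
    if len(ao) != len(cavity):
        raise ValueError("maps must have the same number of texels")
    return [round((a / 255.0) * (c / 255.0) * 255.0) for a, c in zip(ao, cavity)]
```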


Here's a really useful doc I found on PBR for artists. It's a bit of a read but I think it covers everything you need to know:

https://docs.google.com/document/d/1Fb9_KgCo0noxROKN4iT8ntTbx913e-t4Wc2nMRWPzNk/edit?usp=sharing

Also these:

https://www.marmoset.co/posts/physically-based-rendering-and-you-can-too/ <--- practical application

https://www.marmoset.co/posts/basic-theory-of-physically-based-rendering/ <--- education on PBR theory (very useful to artists, but can get techy)

https://www.marmoset.co/posts/pbr-texture-conversion/ <--- if you want to (try to) convert older assets to current gen, may be more work required than what's in the doc though.

But they are not the same thing... right? @@Almightygir - what is the usual industry practice for AO and cavity maps?

What if AO went in the blue channel and the cavity map in an alpha channel? I don't know what I'm saying, lol. @@SomaZ - I suggest you just transition all the nomenclature and switch over now, as Lee suggests, so folks can get used to the new terms. Maybe provide the industry definitions too.

So the Frostbite guys have a "special" type of AO map, where they make the occlusion angles highly acute, which causes much tighter shadows in the map. In practice they're the same thing, as in, they both affect the renderer the same way. Why do they do it, though? Well, an AO map will usually have large, sweeping shadows (think armpits, or between the legs and stuff). Often the lack of ambient light in these regions is enough to create odd-looking shading. So by using cavity occlusion instead, they're limiting the loss of ambient light specifically to areas with really deep geometric variance.

Interestingly, Naughty Dog recently released a paper on Uncharted 4 which can help with this stuff too, by interpolating the baked AO details out at glancing angles. i wrote a blog post on this (have to keep a stupid fucking blog for my masters degree), if anyone wants to read it:

https://leedevonaldma.wordpress.com/2017/02/19/keeping-things-unreal/

The math/pseudocode is:

float FresnelAO = lerp(1.0, BakedAO, saturate(dot(vertexNormalWorldSpace, ViewDir)));
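The same pseudocode as a small Python sketch, for checking the behaviour outside the engine. It assumes ViewDir points from the surface toward the camera, so looking straight at the surface keeps the full baked AO and grazing angles fade it out toward 1.0 (unoccluded). The helper names are hypothetical.

```python
import math


def lerp(a, b, t):
    return a + (b - a) * t


def saturate(x):
    return max(0.0, min(1.0, x))


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fresnel_ao(baked_ao, vertex_normal_ws, view_dir_ws):
    """Fade baked AO toward 1.0 (no occlusion) at glancing angles.

    view_dir_ws is assumed to point from the surface toward the camera.
    N.V = 1 returns baked_ao unchanged; N.V <= 0 returns 1.0.
    """
    n = normalize(vertex_normal_ws)
    v = normalize(view_dir_ws)
    return lerp(1.0, baked_ao, saturate(dot(n, v)))
```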

as for nomenclature... It was a jarring switch industry-wide, and a lot of people rebelled against it. But it really did make sense to use new terminology (e.g. Albedo vs Diffuse, Reflectivity vs Specular) given the subtle differences.

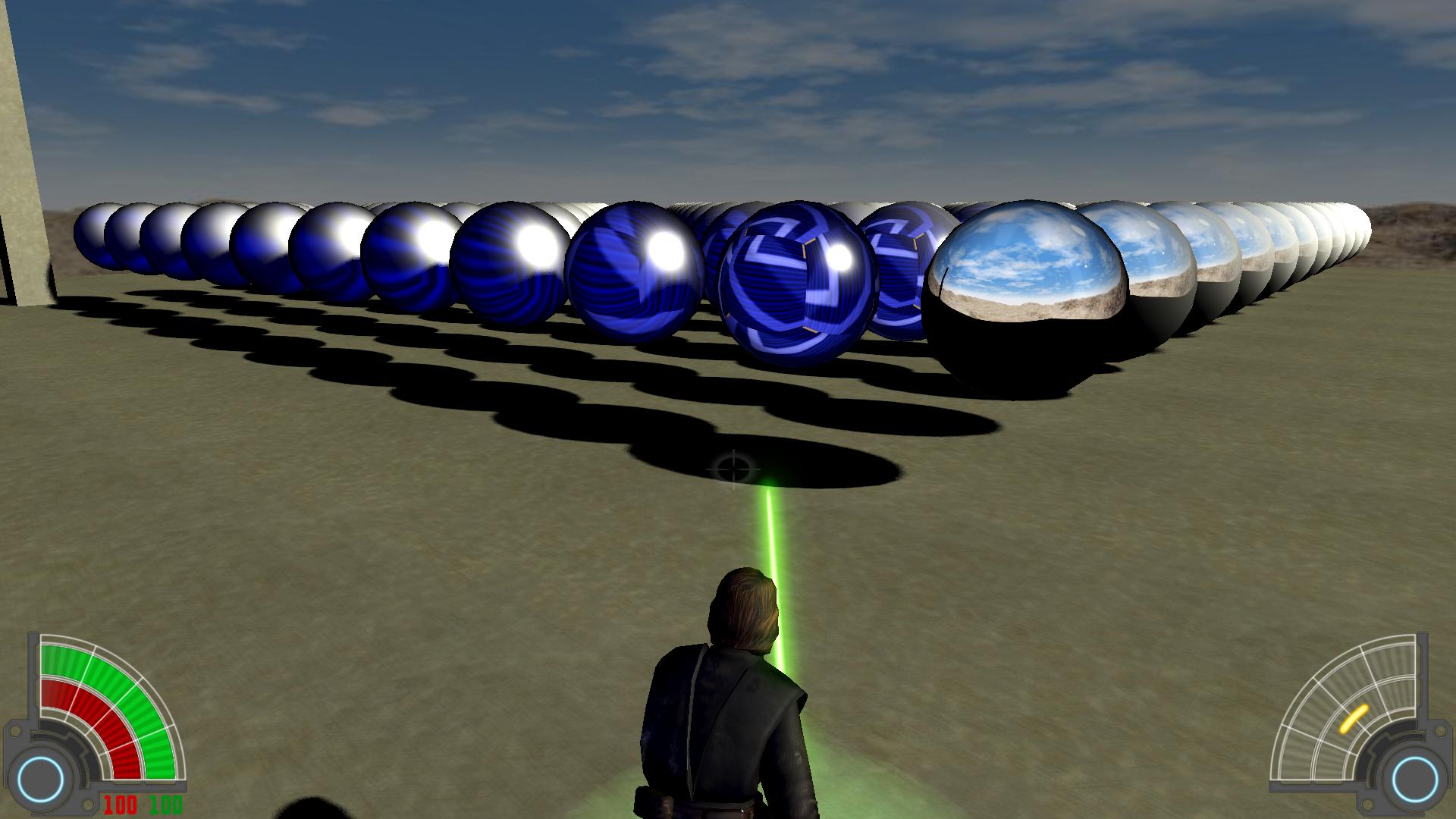

added mip-map convolution with GGX distribution using importance sampling

old standard mip-mapping code (simply downscaling the image)

with pre-filtering (1024 samples)

It takes some time to see the differences if you don't know what it's supposed to do, but I'm positive this was the biggest part of getting IBL working in the engine.
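For anyone curious what GGX importance sampling means in practice, here's a rough CPU sketch of prefiltering one texel, following the widely published split-sum approach (Hammersley points, GGX half-vector sampling, N = V = R assumption). It's not the engine's code: `env` is a hypothetical stand-in for a cubemap lookup, and squaring `roughness` before use is one common convention, not the only one.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def radical_inverse_base2(i):
    """Van der Corput sequence: mirror the binary digits of i around the point."""
    result, f = 0.0, 0.5
    while i:
        if i & 1:
            result += f
        i >>= 1
        f *= 0.5
    return result


def hammersley(i, count):
    """i-th point of a 2D Hammersley set with `count` points, in [0, 1)^2."""
    return i / count, radical_inverse_base2(i)


def importance_sample_ggx(xi, roughness, n):
    """Map a uniform 2D sample to a GGX-distributed half vector around n."""
    a = roughness * roughness  # alpha = roughness^2 (assumed convention)
    phi = 2.0 * math.pi * xi[0]
    cos_theta = math.sqrt((1.0 - xi[1]) / (1.0 + (a * a - 1.0) * xi[1]))
    sin_theta = math.sqrt(max(0.0, 1.0 - cos_theta * cos_theta))
    # Build an orthonormal basis around n, then rotate the sample into it.
    up = (0.0, 0.0, 1.0) if abs(n[2]) < 0.999 else (1.0, 0.0, 0.0)
    tangent_x = normalize(cross(up, n))
    tangent_y = cross(n, tangent_x)
    hx, hy, hz = sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta
    return tuple(tangent_x[k] * hx + tangent_y[k] * hy + n[k] * hz for k in range(3))


def prefilter(env, n, roughness, sample_count=1024):
    """Prefiltered radiance for the texel whose direction is n.

    env: hypothetical callable mapping a unit direction to a radiance value.
    Assumes N = V = R, as in the split-sum approximation.
    """
    n = normalize(n)
    v = n
    accum = 0.0
    weight = 0.0
    for i in range(sample_count):
        h = importance_sample_ggx(hammersley(i, sample_count), roughness, n)
        l = tuple(2.0 * dot(v, h) * h[k] - v[k] for k in range(3))  # reflect v about h
        n_dot_l = dot(n, l)
        if n_dot_l > 0.0:
            accum += env(l) * n_dot_l
            weight += n_dot_l
    return accum / weight if weight > 0.0 else 0.0
```

Running this for every texel of every mip, with roughness increasing per mip, is what produces the progressively blurrier reflection levels; plain downscaling skips the GGX lobe shape entirely.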

Sick!

There's definitely something odd going on with the Fresnel component though: it gets really, really dark at the edges here, when it should get closer to pure reflection instead.
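For reference, Schlick's approximation (a standard formula swapped in here; the renderer may use a different Fresnel term) shows the expected shape: it returns F0 when looking straight at the surface and climbs toward 1.0, i.e. full reflection, at grazing angles. A minimal sketch:

```python
def fresnel_schlick(cos_theta, f0):
    """Schlick's approximation of Fresnel reflectance for one channel.

    cos_theta: cosine between the view vector and the normal (or half vector).
    f0: reflectance at normal incidence.
    """
    cos_theta = max(0.0, min(1.0, cos_theta))
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def fresnel_schlick_rgb(cos_theta, f0_rgb):
    """Apply the same curve per color channel (e.g. tinted metal F0)."""
    return tuple(fresnel_schlick(cos_theta, c) for c in f0_rgb)
```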


AO shall go into the specular map's blue channel. Don't apply it to the albedo; we would lose the correct specular color that way.

So the maps we need for PBR:

- Albedo RGBA
  - RGB: albedo color
  - A: transparency (optional)
- Specular RGB
  - R: roughness (grayscale)
  - G: metal mask
  - B: occlusion (cavity occlusion, ambient occlusion, or a combined one; it doesn't matter, it's the artist's choice)
- Normal RGBA
  - RGB: normal map
  - A: height map (optional)

That's the material layout right now. Occlusion isn't handled yet, though; I'll do that once the IBL work is done.
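A minimal sketch of unpacking that layout into named shader inputs, assuming texels have already been read as floats in [0, 1]. All names here are hypothetical, and the normal decode assumes the usual `2x - 1` encoding.

```python
from dataclasses import dataclass


@dataclass
class SurfaceInputs:
    albedo: tuple      # RGB, 0..1
    alpha: float
    roughness: float
    metal_mask: float
    occlusion: float
    normal_ts: tuple   # tangent-space normal, -1..1
    height: float


def unpack_material(albedo_rgba, specular_rgb, normal_rgba):
    """Split the three textures into named PBR inputs (hypothetical helper)."""
    r, g, b, a = albedo_rgba
    rough, metal, occ = specular_rgb
    nx, ny, nz, h = normal_rgba
    normal = tuple(2.0 * c - 1.0 for c in (nx, ny, nz))  # [0, 1] -> [-1, 1]
    return SurfaceInputs(
        albedo=(r, g, b), alpha=a,
        roughness=rough, metal_mask=metal, occlusion=occ,
        normal_ts=normal, height=h,
    )
```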

you should rename the Specular RGB map to something else; Reflective or Microsurface would be better. "Specular" has a specific, defined meaning in current industry usage.

If you're using metal masks (which you are), then reflectivity = lerp(0.04, albedo.rgb, metalmask). if you're using a specular map, then reflectivity == specular.
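The metal-mask rule above, written out per channel as a minimal sketch. The diffuse helper is an assumption based on the common metalness convention (metals get no diffuse term); it isn't stated in this thread.

```python
def lerp(a, b, t):
    # a * (1 - t) + b * t hits the endpoints exactly at t = 0 and t = 1.
    return a * (1.0 - t) + b * t


def metal_mask_to_reflectivity(albedo_rgb, metal_mask, dielectric_f0=0.04):
    """reflectivity = lerp(0.04, albedo.rgb, metalmask), per channel."""
    return tuple(lerp(dielectric_f0, c, metal_mask) for c in albedo_rgb)


def metal_mask_to_diffuse(albedo_rgb, metal_mask):
    """Assumed convention: the diffuse color fades out as the surface becomes metal."""
    return tuple(c * (1.0 - metal_mask) for c in albedo_rgb)
```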


Also, not sure how your system is set up, but a common method of storing AO at the moment is to put it into the red channel of a packed texture map:

- R = AO
- G = Roughness
- B = Metallic (not sure if this one applies; does your shader system use metalness or specular for reflective input?)


So what is to be done about AO? Will we have support for it as its own texture, or can we simply apply it to the albedo?

NO

NO NO NO NO!!!

AO is AMBIENT occlusion. it masks the AMBIENT contribution in the shader pipeline. by applying it directly to the albedo, you're basically sticking a finger up to the PBR system which is specifically designed to give you consistent lighting, by saying "i want permanent shadows in this exact position, fuck where your lights are".
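A toy, single-channel sketch of the difference, assuming the shader splits lighting into a direct term (from lights) and an ambient term (IBL or light grid). Names are hypothetical; the point is only which term the AO multiplies.

```python
def shade_ambient_ao(albedo, direct, ambient, ao):
    """AO masks only the ambient contribution."""
    return albedo * direct + albedo * ambient * ao


def shade_ao_in_albedo(albedo, direct, ambient, ao):
    """AO pre-multiplied into the albedo: it now darkens direct light too."""
    darkened = albedo * ao
    return darkened * direct + darkened * ambient
```

With a light shining straight into a fully occluded crease (`ao = 0`), the first version still shows the directly lit albedo, while the second stays black no matter where the light is.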


Ah, okay so it's just a bent normal map, something i (character artist) give no hoots about


just curious, but wtf is "deluxe mapping"?


It's either tangents or binormals, not both. If you look at the dotXSI file in a text editor you will see that it contains one set of vectors or the other, not both... even though it will erroneously create both xsi_customPset templates. However, the color data represents whichever property you set first.

that's bad.

we need both.


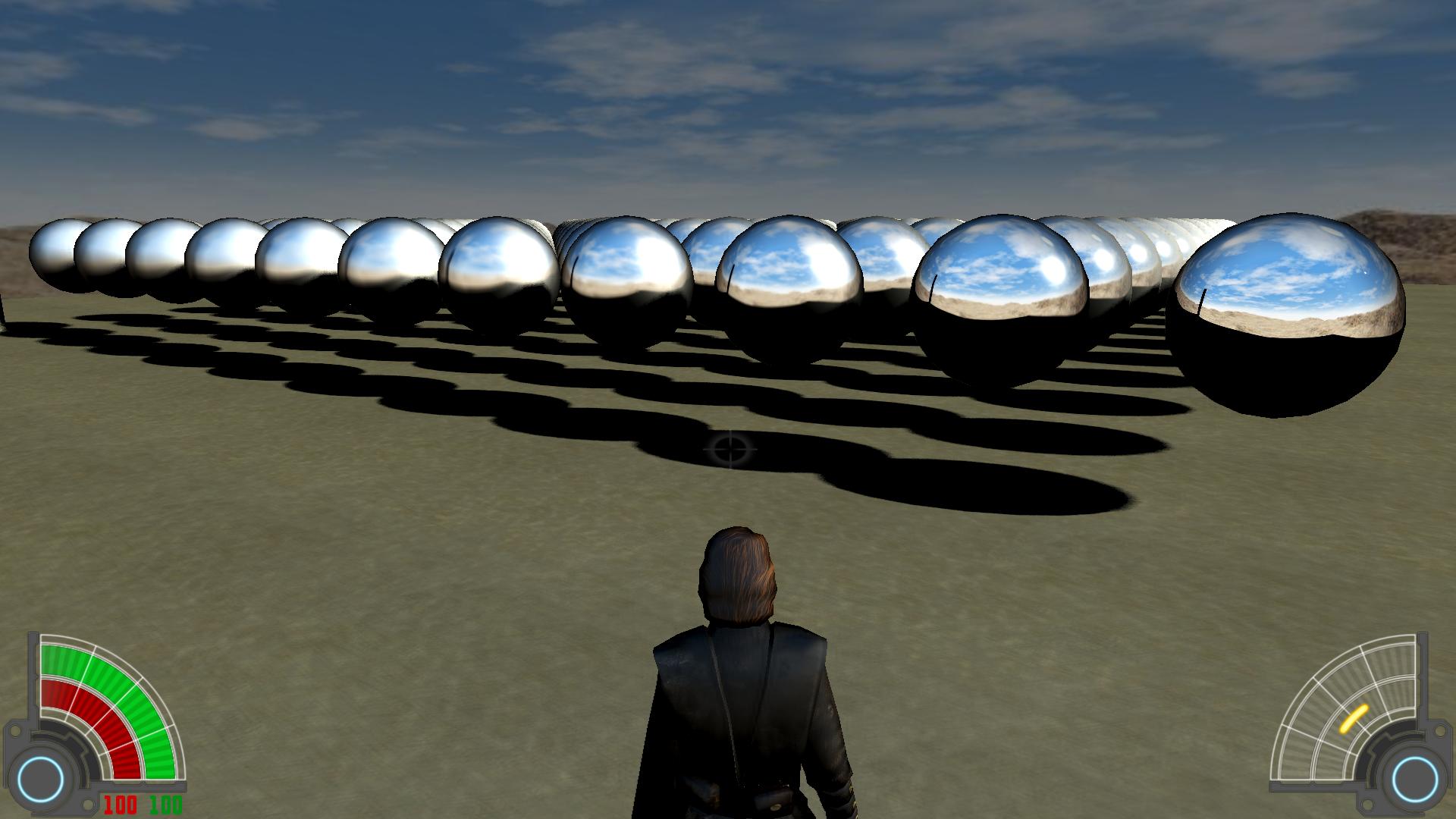

Let's play a little game. What am I working on?

EEEhm, I'm not crazy, no.

You're either working on mip chains, or importance sampling. either of which are crucial to getting PBR IBL correct.

good job

Edit: oops, that was posted yesterday i guess... either way, i'm right >;]

Manually setting the mip level is kinda what you have to do. You importance sample across the hemisphere, using the GGX distribution to decide where those samples land, and the mip level you sample is usually just the gloss (or roughness) input scaled across the 8 mip levels.
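A minimal sketch of that mapping, assuming an 8-level prefiltered chain where level 0 is the sharpest and the last level the roughest. The linear scale is one common choice, not the only one; whatever curve is used has to match the roughness each mip was prefiltered with. Function names are hypothetical.

```python
def prefiltered_mip_level(roughness, mip_count=8):
    """Linear mapping from roughness in [0, 1] to a (fractional) mip level."""
    roughness = max(0.0, min(1.0, roughness))
    return roughness * (mip_count - 1)


def mip_from_gloss(gloss, mip_count=8):
    """Gloss is treated as 1 - roughness here (an assumed convention)."""
    return prefiltered_mip_level(1.0 - gloss, mip_count)
```

The fractional result is meant for a trilinear lookup (e.g. `textureLod`), which blends the two nearest mips.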


my only problem with sticking to .xsi as a format is that Softimage has been discontinued and will be harder and harder to get hold of as various torrenters stop seeding it.

i thought about this some more last night: you're much better off supporting any form of FBX > GLM/GL2 workflow. Unfortunately the support i can offer right now is limited; i'm in the middle of doing a masters degree which is sucking up all of my time. However, i finish in September, and i'm more than happy to jump in and help with tools development then, maybe even see if @@Xycaleth's program can be updated to include skeletal mesh outputs? If we can reach that stage, then the entire .xsi workflow will be obsolete.


So here's an idea that might be better...

@@Xycaleth wrote an fbx > mdl converter, right? So why not modify that to export the new .GTB file containing the tangents and binormals found in the fbx, which can then be read by the importer.

That way, you're no longer reliant on xNormal, and can instead use the fbx format for your low poly when baking and texturing in the now industry-standard Substance tools, Marmoset Toolbag, or Knald baker. And because the .GTB file will contain the tangents and binormals that the fbx had, you can be sure they are synced to the normal map bake as well.

edit: this also breaks reliance on the now deprecated .xsi format in favour of a more widely used current format.


Then please tell me how xNormal knows to use tangent basis vectors stored in FBX (or dotXSI) format? Can you take a screenshot of that? Because I just looked thru the options and plugin configuration settings and nothing jumps out at me that says "use mesh tangent data" ...in the Tangent Basis Calculator config settings, I only see a checkbox for "Compute binormal on the fly in the shader."

All I see that might be relevant is the "Ignore per-vertex-color" checkbox in the Hi-Def mesh's section (but you say that's not applicable). And "Match UVs" and "Highpoly normals override tangent space" checkboxes in the Low-Def mesh's section.

xNormal loads FBX files the same way every other piece of software does, FBX stores vertex data as a struct, with multiple vectors as entries in that struct, one of which is tangents, another is binormals. It just accesses each entry in the struct as needed.

You absolutely should NOT store tangents or binormals in vertex colors as those are often capped at half precision.
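To put numbers on the precision concern, this sketch round-trips vector components through IEEE 754 half floats (Python's `struct` `e` format) and through an 8-bit unsigned-normalized color, which is another common vertex color format. Function names are hypothetical.

```python
import struct


def to_half(x):
    """Round-trip a float through IEEE 754 binary16 (half precision)."""
    return struct.unpack('<e', struct.pack('<e', x))[0]


def to_unorm8(x):
    """Round-trip a [-1, 1] component through an 8-bit unsigned-normalized channel."""
    q = round((x * 0.5 + 0.5) * 255.0)
    return q / 255.0 * 2.0 - 1.0


def max_error(vec, quantize):
    """Largest per-component error introduced by a quantizer."""
    return max(abs(c - quantize(c)) for c in vec)
```

For unit-vector components, half precision stays within a few ten-thousandths, while 8-bit colors can be off by up to 1/255 per component, enough to visibly skew a tangent frame.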


Just to make it absolutely clear to all: the high poly can be any file type. It doesn't need to be .XSI.

Bake came out good Psyk0!


When asking "are we going to force a pipeline on the community?", it's prudent to consider that ever since they've been able to modify the game at all, some 15+ years ago, a pipeline has been forced on them.

It's far better to have a forced, yet clearly defined, outlined, and documented pipeline, than to just say "make it how you like".


@@minilogoguy18 - I doubt Carcass would use the tangent data stored as COLORs.

Just want to quickly point out that normals, tangents, binormals etc. are all just vectors, and colours, in terms of rendering, are also stored as vectors. Therefore you can visualize any of them by outputting that vector into a color channel.
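The usual remap for that kind of debug output is sketched below, assuming unit-length vectors: components in [-1, 1] are shifted into the [0, 1] range a color channel can display, and shifted back when decoding. Function names are hypothetical.

```python
def vector_to_color(v):
    """Remap each component from [-1, 1] to [0, 1] for display."""
    return tuple(c * 0.5 + 0.5 for c in v)


def color_to_vector(c):
    """Inverse remap from [0, 1] back to [-1, 1]."""
    return tuple(x * 2.0 - 1.0 for x in c)
```

This is why a flat tangent-space normal (0, 0, 1) shows up as the familiar light blue (0.5, 0.5, 1.0).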


I'm confused, why isn't it just a plugin download of a few MB? I already have xNormal installed and the latest SDK is also available here

I probably have old Xnormal versions archived somewhere but installing them won't solve the lack of XSI support.

I don't see any options to do that. When you click the MikkTSpace plugin the only option you have is to "compute binormal in the pixel shader". Note: You may need to check it depending on your target engine.

You should check that option if you are using stored mesh tangents and binormals (which is what you should be aiming to do). Otherwise it will generate arbitrary tangents and binormals for the mesh and pass those through to the pixel shader. By checking that option it's telling the pixel shader to use the tangents and binormals per mesh vertex.
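Per the MikkTSpace reference, when the binormal isn't stored per vertex it is rebuilt as the cross product of the normal and tangent, scaled by a handedness sign (usually stored in the tangent's w component). A minimal sketch of that reconstruction, with hypothetical names:

```python
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def reconstruct_binormal(normal, tangent, sign):
    """B = sign * cross(N, T); sign is +1 or -1 (mirrored UVs flip it)."""
    return tuple(sign * c for c in cross(normal, tangent))
```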

Yes, I stated this earlier in the discussion. I did a deep-dive into the Wayback Machine... and I found xNormal v3.17.16 and its SDK. I have submitted it to the Utilities section for a permanent home (it's still awaiting approval)... but you can grab it from this link in the short term:

Make sure you do a dotXSI "ExportSelected" and omit all the bolts and Stupidtriangle_off... because it will complain about meshes without textures. I've also written to Santiago and asked if he'd add back support for the dotXSI file format-- no response yet.

xNormal doesn't export tangents. it exports a tangent space map, which was rendered using tangents either stored in the mesh, or generated arbitrarily by xNormal.

This is why it's important to have a synced workflow. If the baker is using arbitrary values when baking the tangent space map, the renderer will never look right.
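A minimal sketch of why the sync matters: the renderer turns each tangent-space normal map sample into a world-space normal using the mesh's tangent, binormal, and normal. If those vectors differ from the ones the baker used, the same texel decodes to a different direction. Names are hypothetical.

```python
import math


def tangent_to_world(n_ts, tangent, binormal, normal):
    """Rotate a decoded tangent-space normal into world space.

    world = T * x + B * y + N * z, then renormalize.
    """
    v = tuple(tangent[k] * n_ts[0] + binormal[k] * n_ts[1] + normal[k] * n_ts[2]
              for k in range(3))
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)
```

Feeding the same sample, say (0.6, 0, 0.8), through a tangent frame rotated 90 degrees about the normal gives (0, 0.6, 0.8) instead of (0.6, 0, 0.8): same mesh normal, same texel, different lighting.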


If storing tangents directly in the .GLM file is undesirable, as long as they can be reliably stored in a secondary file (.GTB for example, Ghoul Tangent Binormal) and read by the renderer, i don't see a problem.
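Purely as an illustration of the idea, a .GTB side file could be as simple as a header plus one tangent and one binormal per vertex. The layout below (magic `GTB1`, little-endian uint32 vertex count, then six float32 values per vertex) is entirely hypothetical, and a real version would also need to map entries to GLM surfaces and LODs.

```python
import struct

MAGIC = b"GTB1"  # hypothetical identifier, not an existing format


def write_gtb(tangents, binormals):
    """Serialize per-vertex tangents and binormals (hypothetical layout)."""
    if len(tangents) != len(binormals):
        raise ValueError("need one binormal per tangent")
    out = bytearray(MAGIC)
    out += struct.pack("<I", len(tangents))
    for t, b in zip(tangents, binormals):
        out += struct.pack("<6f", *t, *b)
    return bytes(out)


def read_gtb(data):
    """Parse the hypothetical layout back into two lists of 3-tuples."""
    if data[:4] != MAGIC:
        raise ValueError("not a GTB blob")
    (count,) = struct.unpack_from("<I", data, 4)
    tangents, binormals = [], []
    offset = 8
    for _ in range(count):
        values = struct.unpack_from("<6f", data, offset)
        tangents.append(values[:3])
        binormals.append(values[3:])
        offset += 24  # 6 floats * 4 bytes
    return tangents, binormals
```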

@@Xycaleth, seems spot on to me.


@@minilogoguy18 - It's easy for you to say "...it's no big deal to change GLM importers and exporters and update Carcass..." but this isn't on my ToDo list. The Carcass source code was entrusted to an individual in confidence, and any such major change would likely not go unnoticed; shouting "we have the code" may get that source in trouble (and kill any chance of getting further resources), so please try to be discreet.

Please take in-game screenshots of @@AshuraDX's clone trooper and point out the normal map flaws (you too @@Almightygir). Everyone was thrilled with the Clone Trooper results until @@Almightygir comes along and says, "...it's good but not perfect."

And we haven't even thoroughly investigated the dotXSI/xNormal-->GLM workflow.

Furthermore, am I correct to say that Normal maps are NOT going to get baked using GLM meshes? Right? Baking would use FBX, OBJ, or XSI, yes? So it's those formats that need to store the tangent basis vectors (FBX already does)... yes? So you would need to copy the tangent basis vectors from the FBX or XSI files and carry that data thru the GLM conversion and write it into the GLM format, right? And like I said earlier Ghoul2 is a half-precision format (based on XSI single-precision format). So how does that compression affect the mesh data?

With ALL the work that DF2 mod has to do... it seems like vanity to pursue this course until the other workflows are shown to be inadequate.

I think i'm confused... you keep referencing the DF2 mod, i thought that was a separate entity to this? Xycaleth seemed to make that assertion anyway.

As for me coming along and saying "it's not perfect": sorry bro, i'll let you live in your world of rainbows and butterflies. Adding a new field to the GLM format won't affect backward compatibility: the renderer will attempt to read tangents in files that don't have them, get null back, and calculate its own. Worst case scenario, a tangent calculation will need to be added to the renderer to supply the meshes that don't contain tangents with them.
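A rough sketch of the kind of fallback tangent calculation the renderer could use for meshes without stored tangents. It follows the common per-triangle UV-gradient method with Gram-Schmidt orthogonalization (Lengyel's approach), not MikkTSpace, so it won't necessarily match a baker's tangents. Names and the input layout (lists of tuples plus index triples) are hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def compute_tangents(positions, normals, uvs, triangles):
    """Per-vertex tangents as (x, y, z, w), where w is the handedness sign."""
    tan1 = [[0.0, 0.0, 0.0] for _ in positions]  # accumulated U directions
    tan2 = [[0.0, 0.0, 0.0] for _ in positions]  # accumulated V directions
    for i0, i1, i2 in triangles:
        e1 = sub(positions[i1], positions[i0])
        e2 = sub(positions[i2], positions[i0])
        du1, dv1 = uvs[i1][0] - uvs[i0][0], uvs[i1][1] - uvs[i0][1]
        du2, dv2 = uvs[i2][0] - uvs[i0][0], uvs[i2][1] - uvs[i0][1]
        det = du1 * dv2 - du2 * dv1
        if abs(det) < 1e-12:
            continue  # degenerate UVs: this triangle contributes nothing
        r = 1.0 / det
        sdir = tuple((e1[k] * dv2 - e2[k] * dv1) * r for k in range(3))
        tdir = tuple((e2[k] * du1 - e1[k] * du2) * r for k in range(3))
        for idx in (i0, i1, i2):
            for k in range(3):
                tan1[idx][k] += sdir[k]
                tan2[idx][k] += tdir[k]
    result = []
    for n, t, b in zip(normals, tan1, tan2):
        # Gram-Schmidt: remove the part of t that lies along the normal.
        ortho = tuple(t[k] - n[k] * dot(n, t) for k in range(3))
        if dot(ortho, ortho) < 1e-20:
            # No usable UV gradient here: pick any direction perpendicular to n.
            axis = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
            ortho = cross(n, axis)
        w = -1.0 if dot(cross(n, t), b) < 0.0 else 1.0
        result.append(normalize(ortho) + (w,))
    return result
```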


FEATURE: OpenGL 3 Renderer

in DF2 Mod Development


perfect. i'll begin trolling immediately.